Technology Description

The Decision Aids project was originally an initiative to investigate the use of semi-automated decision aids to assist the decision maker in real time.

Technology Need

Mission planning, execution, and assessment are being handled by smaller and smaller groups, which face shorter timelines and overwhelming amounts of data. The diversity of missions that must be planned and the complexity of executing these missions are increasing. In addition, the environment in which the missions take place is increasingly dynamic, thereby causing the objectives and effectiveness to change as the plan unfolds. The original plan may fail or require modification. For these reasons, the decision maker needs semi-automated help in developing plans, in monitoring plan execution and effectiveness as the mission progresses, and in updating the plan as necessary based on continuous situation assessment.

Decision Aids Objectives

The original objective of the Decision Aids effort was to investigate the utility of using semi-automated decision aids in fielded operational systems. Additionally, it was to provide a proof-of-principle testbed to demonstrate methods of moving decision-making technologies toward the users through the development, integration, and demonstration of algorithms that overcome the shortcomings of current mission planning, execution, and assessment processes. We also demonstrated the applicability of these algorithms and support tools in multiple mission domains.

An additional objective of this Decision Aids project was to implement and demonstrate an open, scalable, standards-compliant testbed with the flexibility to analyze and demonstrate applications in multiple future mission domains. It established a platform for technology insertion and integration of advanced graphical, visual, and algorithmic decision and planning processes, and provided a testbed to integrate Commercial-Off-The-Shelf (COTS) and Government-Off-The-Shelf (GOTS) products. The objective was intended to be implemented using modern programming languages, practices, and procedures. The objectives included:

To satisfy evolving mission requirements, the next generation of operational mission architectures must comply with these concepts and their accompanying emerging standards. These architectures must furnish a total life-cycle environment in which it is easy and inexpensive to integrate, verify, and operate the full range of applications that are required for mission success. The system study and prototyping efforts are being conducted with these requirements in mind.

Approach

Four interrelated concepts characterize our top-down systems engineering approach:

The JOPES process defines common decision processes and provides:

This decision-centered approach guided development of displays to support the decision maker and, subsequently, the algorithms to drive the displays. The latter are based solely on organizing information and presenting it to a user in support of a decision. Reasoning and learning algorithms were formulated to populate the displays. We derived four decision types and found the corresponding classes of decision-making algorithms. Specifically, case-based reasoning is excellent for planning; rules work best for doctrine-based execution; optimal policy is useful for deciding when to update a plan; and belief networks are well suited to the uncertain reasoning required for situation assessment.

We have demonstrated that JOPES is effective in providing these common processes (deliberate planning, mission planning, execution, and assessment) and in assuring reuse across mission domains by tailoring our testbed to include numerous mission areas. Mission integration, based on acquiring domain expertise in each of the four areas we addressed, produced the degree of understanding needed to provide useful decision aids in each of the areas. Our experience is likely to be unusual because the underlying research projects were largely unconstrained with regard to which tools to use, what specific databases to use, and what results to obtain. We refined, tailored, and demonstrated innovative decision tools that span the full spectrum of JOPES-based decision-making needs in these areas: situation diagnosis, pattern discovery, strategy determination, and detailed planning.

Where possible, we have used freely available software and written wrappers to supply the functionality needed for the Decision Aids framework. Most importantly, these include:

The Decision Aids project has resulted in what we are calling the Decision Triade. It consists of the following three legs:

The main purpose of the Decision Aids project is the tailoring of a general-purpose engine to fuse evidence. The centerpiece of the Deep Belief Network is an evidential reasoning model which provides the decision maker with an estimate of how well the plan is unfolding.

The belief network tool described here provides semi-automated reasoning support for decision makers faced with risky decisions and unknown intents based on assessment information that is inherently uncertain, incomplete, and possibly conflicting. Decision makers often find it difficult to mentally combine evidence - the human tendency is to postpone risky decisions when data is incomplete, jump to conclusions, or refuse to consider conflicting data. Those versed in classical (frequentist) statistics realize it doesn’t apply in situations where evidence is sparse. A data fusion engine is needed.

Data fusion is a difficult, multi-faceted problem with many unsolved challenges. A contributing factor to these challenges is that the problem is ill posed – data fusion means many things to many people. The taxonomy we chose attempts to organize and differentiate mathematical building blocks, correlation schemes, and “true” data fusion methods. Clearly, there are many types of data fusion, and the difference between fusion and correlation is tenuous. At the top are the fundamental building blocks, which are then differentiated into analytical, statistical, and sub-symbolic techniques. Uncertain reasoning, the topic of this paper, is among the statistical approaches.

Related techniques, such as Bayesian Networks and Rough Set Theory, were assessed for applicability. The evidential reasoning approach that we chose relies on the Dempster-Shafer Combination Rule, because it provides intuitive results, differentiates ignorance and disbelief (sometimes described as “skeptical” processing), and performs conflict resolution. Bayesian networks also provide intuitive results, but are better suited to causal reasoning. Rough sets differentiate between what is certain and what is possible and are of potential future interest – a truth maintenance system appears necessary to track the validity of hypotheses as evidence is accumulated.

Insights into the behavior of the belief network were obtained from an analytical study. Mathematical and empirical properties were explored and codified. Discovery of a representation for an identity and its inverse revealed fascinating properties with practical application - e.g., fusion equations are soluble in the same way that matrix equations are.

| Math Properties | Importance |
|---|---|
| Stable | No numerical instabilities (e.g., divide by zero) |
| Symmetric | Belief and disbelief are computed equivalently |
| Bounded | Belief + Ignorance + Disbelief = 1 |
| Commutative | Same result, regardless of fusion order |
| Associative | Same result, regardless of fusion sequence |
| Unit Triade (0,1,0) | Fusion with pure ignorance (0,1,0) leaves the result unchanged |
| Inverse Exists | Can solve fusion equations |
| Generalizes Bayes | Any Bayesian model can be replicated exactly |
| Conflict Resolution | Explicitly provided based on normalization factor |

| Derived Properties | Importance |
|---|---|
| Scalable | Simple strategies avoid combinatorial explosion |
| Intuitive | Algorithm produces common sense results |
| Comprehensive | Meaningful results produced in all cases |
| Slight Belief is ineffective | Make belief or disbelief either zero or >0.5 |
| Reversing Trends | Strategies: delete previous belief, add strong disbelief |
| Polarization | Ignorance is quickly reduced in favor of strong belief |
| Network Connectivity | Make as sparse as possible to avoid cascading |
| Link Values | Make link value for belief and disbelief equal |

A novel feature of the evolving Decision Aids implementation is that the user is allowed to override the belief or disbelief associated with a hypothesis (node); the belief network then self-adjusts the appropriate link values (that is, it learns) by instantiating the override. The back-propagation algorithm from artificial neural network research is used to adjust the links. In addition, the belief network constructs explanations of how outcomes are obtained, which is important in risky decision-making environments. The work described here has broad applicability to risky decision making in circumstances where evidence is uncertain, incomplete, possibly conflicting, and arrives asynchronously over time.

The advantages of using Belief Networks as the core offering include:

Belief networks are used to model uncertainty in a domain. The term "belief networks" encompasses a whole range of different but related techniques which deal with reasoning under uncertainty. The basic idea in belief networks is that the problem domain is modeled as a set of nodes (representing concepts) interconnected with arcs (showing relationships between the concepts) to form a directed acyclic graph. Each node represents a random variable, or uncertain quantity, which can take two or more possible values. The arcs signify the existence of direct influences between the linked variables, and the strength of each influence is quantified by a forward conditional probability.

Belief networks can be used whenever a classical knowledge based system (KBS) might be used. Belief networks provide the following advantages, when compared with a classical KBS:

A belief network is represented as an acyclic directed graph containing nodes representing a set of mutually exclusive and exhaustive concepts and arcs representing probabilistic relationships between the nodes.

Within a belief network, the belief of each node (the node's conditional probability) is calculated based on observed evidence. Various methods have been developed for evaluating node beliefs and for performing probabilistic inference. However, all these schemes are basically the same: they provide a mechanism to propagate uncertainty in the belief network, and a formalism to combine (fuse) evidence to determine the belief in a node. A Dempster-Shafer belief network includes node parameters that conform to the Dempster-Shafer combination rule, which is based on an evidential interval: the sum of a belief value, a disbelief value, and an unknown value is equal to one. Under the Dempster-Shafer Combination Rule for fusion of evidence, each node in the network is represented as an evidential interval whose values are real numbers in the range 0.0 <= n <= 1.0. Three parameters specify each node: a belief value (B), a disbelief value (D), and an unknown value (U).

The unknown parameter is computed as: U=1-B-D. The Dempster-Shafer Combination Rule is symmetric, bounded, commutative, and associative.
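As a minimal sketch of how two evidential intervals might be fused, the C code below applies the textbook Dempster rule to a two-outcome frame (the hypothesis is either true or false). The `Triple` type and the `triple_make` and `triple_fuse` names are illustrative assumptions, not the Decision Aids implementation. The project's variant of the rule may also differ, for example in how it treats total conflict, where the textbook normalization factor is zero.

```c
#include <assert.h>
#include <stdbool.h>

/* Evidential interval for one node: belief + disbelief + unknown = 1. */
typedef struct {
    double b;  /* belief in the hypothesis */
    double d;  /* disbelief (belief in its negation) */
    double u;  /* unknown (ignorance), U = 1 - B - D */
} Triple;

/* Build a triple from belief and disbelief; unknown is the remainder. */
Triple triple_make(double b, double d)
{
    assert(b >= 0.0 && d >= 0.0 && b + d <= 1.0 + 1e-12);
    Triple t = { b, d, 1.0 - b - d };
    return t;
}

/*
 * Fuse two triples with the textbook Dempster rule on the frame {H, not H}.
 * Returns false (and leaves *out untouched) when the two sources are in
 * total conflict, because the normalization factor 1 - K is then zero.
 */
bool triple_fuse(Triple x, Triple y, Triple *out)
{
    double k = x.b * y.d + x.d * y.b;   /* conflicting mass K */
    double norm = 1.0 - k;
    if (norm <= 0.0) {
        return false;
    }
    out->b = (x.b * y.b + x.b * y.u + x.u * y.b) / norm;
    out->d = (x.d * y.d + x.d * y.u + x.u * y.d) / norm;
    out->u = (x.u * y.u) / norm;
    return true;
}
```

Under these assumptions, fusing (B=0.6, D=0.1) with (B=0.5, D=0.2) gives a belief of about 0.76, a disbelief of about 0.13, and an unknown of about 0.11. Fusing any triple with pure ignorance (B=0, D=0, U=1) returns it unchanged.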

A decision system defines a model for doing probabilistic inference in response to changes in information (evidence) using a belief network. In building a decision system, an agent generally must consider alternative decisions and outcomes, preferences about the possible outcomes, and the uncertain relationships among actions and outcomes. The agent must first determine the variables of the domain. Next, data is accumulated for those variables and an algorithm is applied that creates a belief network from this data. The accumulated data comes from real-world instances of the domain, that is, real-world instances of decision making in a given field. For some decision-making applications, however, it can be difficult in practice to find sufficient applicable data to construct a viable belief network. Moreover, this traditional means of generating a decision system is labor intensive, requiring many hours of labor from an agent knowledgeable about the desired application. To simplify and accelerate this process, the generation of a decision system from text can be semi-automated. An information extraction component extracts a quantum of evidence and an associated confidence value from a given text segment. An evidence classifier associates each quantum of evidence with one of a plurality of concepts which are used in the building of the belief network.

Automated Discovery of Unknown Unknowns (ADUU)

In Belief Network analysis, it is often assumed that derived data (in this case, confidence) can be modeled as being derived from a number of concepts and relationships. However, in complex, real-world situations, there may be a very large number of possible concepts and relationships, and a model may only have knowledge of the commonly occurring ones. In this case it can be useful to discover a ‘novel’ (new) concept or relationship, in order to model observations (evidence) that do not correspond to any of the known concepts or relationships. The inclusion of this extra concept or relationship gives a belief network system two potential benefits. Firstly, it is useful to know when novel concepts or relationships are being followed. Secondly, the new concept or relationship provides a measure of confidence for the system. That is, by confidently classifying a concept or relationship as ‘none of the above’, we know that there is some structure in the data which is missing in the model.

The message is that there are no knowns.

There are things we know that we know.

There are known unknowns - that is to say there are things we know we don't know.

But there are also unknown unknowns - things we do not know we don't know.

- Donald Rumsfeld

At this point, we should define some terms in the context of a belief network from the point of view of the network.

Once this process is complete, a “Closed World” decision system exists which can be used in the decision-making process using real world instances of data (evidence) in real-time:

Belief networks work on Closed World assumptions. The analyst has to decide whether absence of data means ‘not’ or ‘unknown’. Clearly not all the possible concepts and relationships between the concepts are known - but we don't even know if they have been investigated or not. There are some necessarily true concepts and relationships that we can make and some possibly true concepts and relationships. But, does absence of information imply that no concepts or relationships exist (Closed World), or does it allow that there might be some that have not been considered or recognized (Open World)? But nothing in the known concepts or relationships precludes any other evolutionary concepts or relationships from also being true (they are just unknown or unrecorded).

In the Open World, there are innumerable true concepts and/or relationships, most of which do not apply in the domain of interest. If we transfer our knowledge to Open World reasoning, we must exclude these other potential truths or restrict which possible other truths we wish to postulate/test. An analyst would interpret the available concepts and relationships and alter his/her assumptions based on the available 'supporting evidence' (the amount, type, and quality of assertions/evidence recorded).

One might choose to ignore possible Open World interpretations (Occam's Razor) and might in some cases assume unknown knowledge is 'negative', but one must also account for the reality that they do not have all the information - they are working in an Open World system. That being the case, it is only logical to go to the Open World to search for new (novel) concepts that are not modeled in our Closed World system. For the purposes of this paper, the Open World will be defined to be the World Wide Web.

The approach is to use automated reasoning and learning algorithms (separately and in combination) to leverage extremely rich structures from a knowledge base by using what is known in the knowledge base (the Closed World) and new evidence to perform a keyword search of the Web (the Open World). These resulting differences (the "unknown unknowns") are of two types: new concepts that might explain a situation and new links that might explain previously unknown relationships between concepts. While humans seem to have this reasoning capability, the automation of this process is more difficult. In fact, until now it has been an unresolved research problem.

ADUU - Experiments

Applying the basic approach described above resulted in a prototype for the automated discovery of unknown unknowns (ADUU) that depends on the characteristics of the data. The example shown here successfully demonstrates this method in the domain of anti-terrorism.

The knowledge base contains the accumulated evidence to date. Each piece of evidence consists of numerous (in this case, 48) characteristics evolved from what are known as the Reporter’s Questions - Who, What, When, Where, Why, and How. For the purposes of this experiment, the three most important characteristics are ‘who’, ‘where’, and ‘what’. Using ‘who’ = ‘Al-Qaeda’, ‘where’ = ‘Spain’, and ‘what’ = ‘attack’ (all three derived from the information extracted from a text segment), we use these three values in a Google keyword search of the Web. The result is a list of links to articles on the web, including the following link:

http://analysis.threatswatch.org/2007/03/psyops-alqaedas-spanish-litmus/

By feeding this link into a text summarization tool, we find that the goal of the Spain subway bombings was to ‘influence elections’ in Spain since it was ‘politically vulnerable’. These concepts are not included in the anti-terrorism belief network, but probably should be and they should be linked to ‘Overthrow government’. Two new concepts and links that were not previously included in the Closed World belief network (“unknown unknowns”) can now be included in the model.
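The comparison step in this experiment, checking which concepts found on the Web are absent from the Closed World model, can be sketched as a simple set difference. The function names and the exact-match string comparison are assumptions made for illustration; the source does not describe how the prototype matches concept labels.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Return 1 if `concept` is already a node label in the closed-world model. */
static int is_known(const char *concept, const char *const known[],
                    size_t n_known)
{
    for (size_t i = 0; i < n_known; i++) {
        if (strcmp(concept, known[i]) == 0) {
            return 1;
        }
    }
    return 0;
}

/*
 * Copy into `novel` every candidate concept that the closed-world model does
 * not contain, and return how many were found. `novel` must have room for
 * n_candidates pointers. Matching is exact and case-sensitive (an assumption).
 */
size_t find_novel_concepts(const char *const candidates[], size_t n_candidates,
                           const char *const known[], size_t n_known,
                           const char *novel[])
{
    size_t count = 0;
    for (size_t i = 0; i < n_candidates; i++) {
        if (!is_known(candidates[i], known, n_known)) {
            novel[count++] = candidates[i];
        }
    }
    return count;
}
```

With a known set containing "Overthrow government" and candidates that include "influence elections" and "politically vulnerable", the function would report the latter two as candidates for new nodes. Deciding where to link them remains a separate step.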

ADUU - Abductive Reasoning

Abductive reasoning is reasoning to the best explanation, but is not guaranteed to provide correct inferences. We use a tool called SUBDUE from the University of Texas. The algorithm produces new unknown nodes and links from structured Reporter's Questions data based on machine learning; specifically, minimum description length (MDL) principles.

| Algorithm | Type | Class | Category | Preference Bias |
|---|---|---|---|---|
| ADUU | Supervised | Instance Based | Reasoner | Prefers graph-based problems |
| K-Nearest Neighbor | Supervised | Instance Based | Classifier | Prefers distance-based problems |
| SVM | Supervised | Probabilistic | Classifier | Prefers binary classification problems |
| Neural Networks | Supervised | Instance Based | Classifier | Prefers binary inputs |
| Hidden Markov | Both | Markovian | Reasoner | Prefers memoryless and time-series data |
| Hierarchical Clustering | Unsupervised | Clustering | Reasoner | Prefers grouped data expressed as some form of distance |

SUBDUE is a graph-based knowledge discovery system that finds structural, relational patterns in data representing entities and relationships. SUBDUE represents data using a labeled, directed graph in which entities are represented by labeled vertices or subgraphs, and relationships are represented by labeled edges between the entities. SUBDUE uses the minimum description length (MDL) principle to identify patterns that minimize the number of bits needed to describe the input graph after being compressed by the pattern. SUBDUE can perform several learning tasks, including unsupervised learning, supervised learning, clustering and graph grammar learning. SUBDUE has been successfully applied in a number of areas, including bioinformatics, web structure mining, counter-terrorism, social network analysis, aviation and geology.

The three types of machine learning that the Decision Aids use from SUBDUE are:

In graph-based unsupervised learning (Discovery) mode, SUBDUE uses a heuristic search guided by MDL to find patterns minimizing the description length of the entire graph compressed with the pattern. Once a pattern is found, SUBDUE can compress the graph using this pattern and repeat the process on the compressed graph to look for more abstract patterns, possibly defined in terms of previously discovered patterns.
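To make the compression criterion concrete, the sketch below scores a pattern by graph size (vertices plus edges) rather than by a true bit-level description length. This is a simplifying assumption: the ratio has the same form as the MDL value, size(G) / (size(S) + size(G|S)), but the `GraphSize` type, the function names, and the rule for collapsing instances are illustrative and are not taken from SUBDUE's source.

```c
#include <assert.h>

/* Size of a graph measured as vertices plus edges (a stand-in for bits). */
typedef struct {
    int vertices;
    int edges;
} GraphSize;

/*
 * Size of graph g after each of `instances` non-overlapping occurrences of
 * pattern s is collapsed into a single vertex. Edges inside an occurrence
 * disappear; edges that leave it are kept.
 */
GraphSize compressed_size(GraphSize g, GraphSize s, int instances)
{
    assert(instances >= 0);
    GraphSize out;
    out.vertices = g.vertices - instances * s.vertices + instances;
    out.edges = g.edges - instances * s.edges;
    return out;
}

/*
 * Compression value of pattern s: size(G) / (size(S) + size(G|S)).
 * Values above 1 mean that describing the pattern once, plus the compressed
 * graph, is cheaper than describing the original graph.
 */
double compression_value(GraphSize g, GraphSize s, int instances)
{
    GraphSize rest = compressed_size(g, s, instances);
    double before = g.vertices + g.edges;
    double after = (s.vertices + s.edges) + (rest.vertices + rest.edges);
    return before / after;
}
```

For a graph of 40 vertices and 30 edges, a pattern of 3 vertices and 2 edges that occurs 10 times scores 2.0 under this measure, while the same pattern occurring only once scores slightly below 1 and would not be worth keeping.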

In graph-based supervised learning, two graphs depicting both positive and negative examples of a phenomenon are used by SUBDUE in a supervised-learning mode, searching for a pattern that compresses the positive graphs, but not the negative graphs. For example, given positive graphs describing criminal networks and negative graphs describing normal social networks, SUBDUE can learn patterns distinguishing the two, and these patterns can be used as a predictive model to identify emerging criminal networks.

In graph-based hierarchical clustering mode, the ability of SUBDUE to iteratively discover patterns and compress the graph can be used to generate a clustering of the input graph. Essentially, clustering mode forces SUBDUE to iterate until the input graph can be compressed no further. The resulting patterns form a cluster lattice, such that if a pattern 'S' is defined in terms of one or more previously-discovered patterns, then these patterns are parents of 'S' in the lattice.

| Question | Field 1 | Field 2 | Field 3 | Field 4 |
|---|---|---|---|---|
| Who (People/Groups) | Reporter Org. | Reporter Affil. | Reporter Desc. | Reporter Name |
| Who (People/Groups) | Enemy Org. | Enemy Affil. | Enemy Desc. | Enemy Name |
| Who (People/Groups) | Ally Org. | Ally Affil. | Ally Desc. | Ally Name |
| Who (People/Groups) | Party Org. | Party Affil. | Party Desc. | Party Name |
| Where (Location) | Region | Nation | Locality | City |
| Where (Location) | Structure | Latitude | Longitude | Altitude |
| Why (Goals/Intent) | Strategic | Operational | Action | Task |
| How (Means/Methods) | Method | Desc. 1 | Desc. 2 | Desc. 3 |
| How (Means/Methods) | Casualties | Injuries | Cost | Objective |
| When (Time/Duration) | Start Time | End Time | Duration | Time Frame |
| Other | Hypothesis | Belief | Disbelief | Prep. Time |
| Other | Prep. Date | Prep. Name | Mission | Subject |

The Reporter's Questions (RQ) data we used (see table above) is converted into the file format that SUBDUE requires: a list of vertices (nodes) and edges (links). The graph is created as a tree of depth 1, where the top node contains the generic label "Connection". The children of this node hold the field values from the RQ data record and the edges hold the field names. SUBDUE processes this graph by searching for substructures that compress it, performing the compression, and repeating the process until there are no more substructures that compress the graph.

The following pseudo-code fragment shows how to build the ".g" graph file from the Reporter's Questions data:

```c
        if (fields[i].select) {
            //
            // Build the graph vertices for the fields in this record
            //
            Vn++;
            fields[i].Vn = Vn;
            fprintf(grafp, "v %d %s\n", Vn, fields[i].value);
        }
    } // -- end record processing --
    //
    // Build the graph edges for the fields that are selected in this record.
    // The loop bound was lost from the original listing; nfields is an
    // assumed name. rootVn is likewise assumed: it stands for the vertex
    // number of this record's "Connection" node, because %d needs an integer
    // where the original passed the string "Connection".
    //
    for (i = 0; i < nfields; i++) {
        if (fields[i].select) {
            fprintf(grafp, "e %d %d %s\n", rootVn, fields[i].Vn, fields[i].name);
        }
    }
    if (feof(clufp)) break;
}
//
fclose(clufp); cluFileOpen = FALSE;
fclose(grafp);
//
// Run Subdue
// ----------
//
fprintf(stderr, "Doing cluster analysis using graph file %s\n", clu_gfn);
sprintf(clutemp, "Subdue -cluster -truelabel %s", clu_gfn);
irc = exe_command(clutemp, 1);
```

After generating the compressed graph, which by default is in a ".g" file, it can be viewed graphically with AT&T's freely available GraphViz software using the 'dotty' program. For more detailed information about SUBDUE go to the SUBDUE Web Site. For details about generating SUBDUE graphs, see the SUBDUE Users Manual.
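Because the fragment above starts partway through its enclosing loops, a self-contained sketch of the same depth-1 tree construction may be easier to follow. The `RqField` type and `write_record_graph` function are hypothetical names introduced here; the `v` and `e` line layout follows the `fprintf` calls in the fragment. Labels are written unquoted, so this sketch assumes field values without whitespace.

```c
#include <assert.h>
#include <stdio.h>

/* One selected field of a Reporter's Questions record (hypothetical layout). */
typedef struct {
    const char *name;   /* field name, used as the edge label */
    const char *value;  /* field value, used as the vertex label */
} RqField;

/*
 * Write one record as a depth-1 tree: a root vertex labeled "Connection"
 * with one child vertex per field. `next_vertex` is the first unused vertex
 * number; the function returns the next unused number after writing.
 */
int write_record_graph(FILE *out, const RqField *fields, int n_fields,
                       int next_vertex)
{
    assert(out != NULL && n_fields >= 0);

    int root = next_vertex++;
    fprintf(out, "v %d Connection\n", root);

    /* Vertices first, then edges, in the same order as the fragment. */
    for (int i = 0; i < n_fields; i++) {
        fprintf(out, "v %d %s\n", next_vertex + i, fields[i].value);
    }
    for (int i = 0; i < n_fields; i++) {
        fprintf(out, "e %d %d %s\n", root, next_vertex + i, fields[i].name);
    }
    return next_vertex + n_fields;
}
```

Calling the function once per record, and passing each return value as the next call's `next_vertex`, keeps vertex numbers unique across the whole file.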

After building the belief network, the next step is to find evidence for it. This evidence can come in the form of free-form textual input (e.g., newspaper articles, email, documents), well-formatted messages, or voice mail. We decided to take information from the input source and convert it into an evidence form called the Reporter’s Questions Template. This evidence template is used as a standardized way of storing the data in a knowledge base. It has fields that accept values that answer a tailored set of Reporter’s Questions (Who, What, Where, When, Why, and How). The evidence is used by the classifier to explain conclusions drawn from the input. Information Extraction (IE) is the process of taking words from the body of text and putting them into a template. Basically, information extraction is a way to have the computer answer questions using information from a text. The steps in IE are: tokenizing, sentence splitting, part-of-speech tagging, named entity tagging, co-reference resolution, and template filling.

| Hedge word | Belief | Hedge word | Belief | Hedge word | Belief | Hedge word | Belief | Hedge word | Belief |
|---|---|---|---|---|---|---|---|---|---|
| Absolutely | 1.00 | Actively | 0.78 | Actually | 0.76 | Admittedly | 0.89 | Almost | 0.83 |
| Apparently | 0.93 | Certainly | 1.00 | Characteristically | 0.88 | Already | 0.97 | Conceivably | 0.78 |
| Conceptually | 0.75 | Hardly | 0.23 | Hopefully | 0.43 | Clearly | 1.00 | Conceptually | 0.75 |
| Eventually | 0.89 | Inevitably | 0.67 | Might | 0.52 | Ideally | 0.5 | Impossible | 0.00 |
| Likely | 0.72 | Maybe | 0.54 | Perhaps | 0.4 | Normally | 0.67 | Occasionally | 0.51 |
| Possibly | 0.6 | Predictably | 0.7 | Presumably | 0.45 | Probably | 0.55 | Rarely | 0.23 |
| Strongly | 0.98 | Supposedly | 0.64 | Theoretically | 0.48 | Unlikely | 0.03 | May | 0.20 |

To determine the belief/disbelief of the evidence, we use the concept of hedge words. Hedge words are the words people use within a text to emphasize their degree of belief in a particular fact. The idea is to make a word list of these hedge words and assign a value of belief to each word. Within a given text, hedge words give the program some idea of how the person felt when they wrote the document. The program assigns a value of belief/disbelief to the document. We took the mean value of the hedge words contained within that text. We concluded that one cannot judge the belief of a text solely on the words within the text. When people believe or disbelieve a text, they not only take into account the words, but also the source, their own past experience with the topic of the text, and their belief in the source. In other words, people put their knowledge of the world behind the belief in a given text.
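The mean-of-hedge-words calculation described above can be sketched as follows. The belief values are copied from a few rows of the hedge-word table; the tokenization rule (runs of letters, case-insensitive) and the use of -1.0 to signal "no hedge words found" are assumptions, since the source does not say how the program splits text or handles texts without hedge words.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>
#include <string.h>

typedef struct {
    const char *word;   /* lowercase hedge word */
    double belief;      /* belief value assigned to it */
} Hedge;

/* A few entries copied from the hedge-word table above. */
static const Hedge HEDGES[] = {
    { "certainly", 1.00 }, { "likely", 0.72 }, { "probably", 0.55 },
    { "maybe", 0.54 },     { "rarely", 0.23 }, { "unlikely", 0.03 },
};
static const size_t N_HEDGES = sizeof HEDGES / sizeof HEDGES[0];

/* Look up one lowercase token; returns -1.0 when it is not a hedge word. */
static double hedge_value(const char *token)
{
    for (size_t i = 0; i < N_HEDGES; i++) {
        if (strcmp(token, HEDGES[i].word) == 0) {
            return HEDGES[i].belief;
        }
    }
    return -1.0;
}

/*
 * Mean belief of all hedge words in `text`. Tokens are maximal runs of
 * letters, compared case-insensitively. Returns -1.0 if the text contains
 * no hedge words, so the caller can treat the belief as unknown.
 */
double text_belief(const char *text)
{
    char token[64];
    size_t len = 0;
    double sum = 0.0;
    int count = 0;

    for (const char *p = text; ; p++) {
        if (*p != '\0' && isalpha((unsigned char)*p)) {
            if (len < sizeof token - 1) {
                token[len++] = (char)tolower((unsigned char)*p);
            }
        } else {
            if (len > 0) {
                token[len] = '\0';
                double v = hedge_value(token);
                if (v >= 0.0) {
                    sum += v;
                    count++;
                }
                len = 0;
            }
            if (*p == '\0') {
                break;
            }
        }
    }
    return count > 0 ? sum / count : -1.0;
}
```

A sentence containing "probably" (0.55) and "unlikely" (0.03) averages to 0.29. The example also shows why a word-level mean is a weak signal on its own: two hedges attached to different facts are blended into one score.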

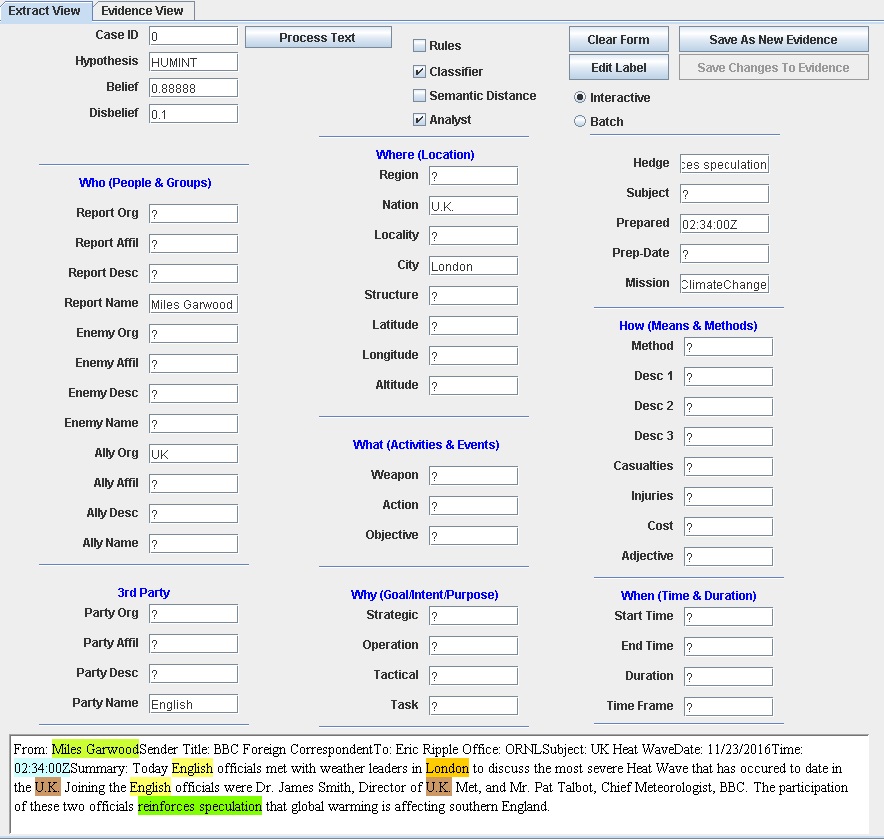

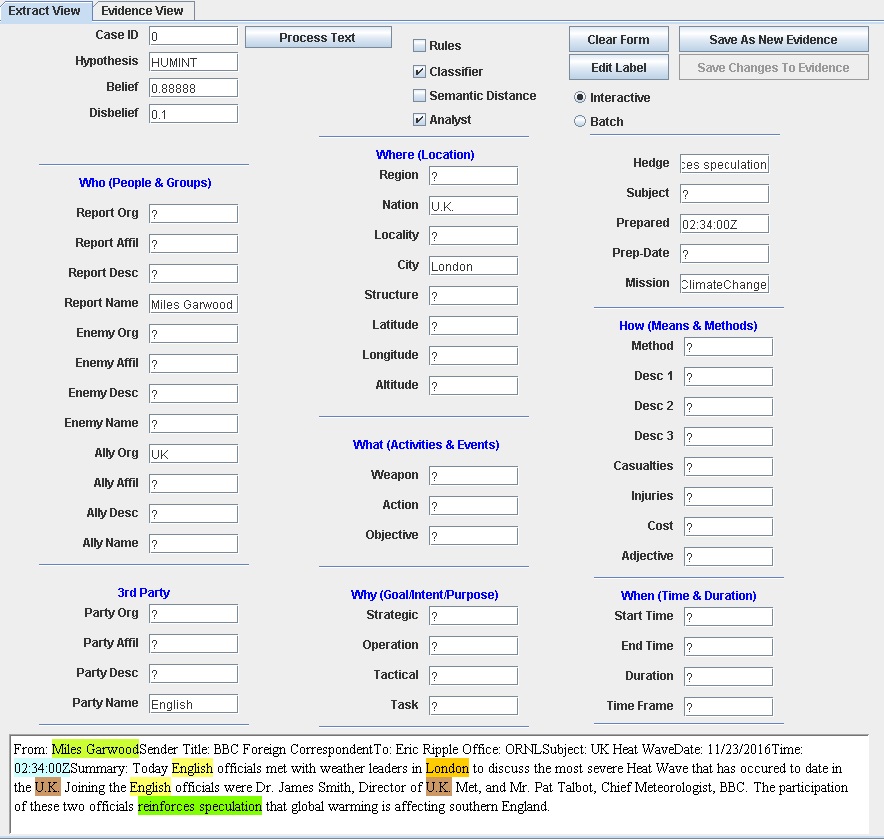

The following screenshot shows the result of extracting information from an example textual input. The result consists of answers to the Reporters Questions (Who, Where, What, Why, How, and When) derived from the text and inserted into the template. This provides the basis for determining the evidence needed for a node in the Belief Network.

After the evidence is extracted, the next step is to associate the extracted evidence with the appropriate Belief Network node. This is done using a classifier which uses the Reporter's Questions data previously stored in the knowledge base as training data to decide which node to apply the evidence to. We have investigated several classifiers during the course of our work. There are numerous classifiers, including:

The most promising for our Decision Aids implementation were AutoClass, Weka, and semantic distance.

AutoClass

AutoClass is an unsupervised Bayesian classification system that seeks a maximum posterior probability classification. Key features include:

AutoClass uses only vector valued data, in which each instance to be classified is represented by a vector of values, each value characterizing some attribute of the instance. Values can be either real numbers, normally representing a measurement of the attribute, or they can be discrete, one of a countable attribute dependent set of such values, normally representing some aspect of the attribute.

It models the data as a mixture of conditionally independent classes. Each class is defined in terms of a probability distribution over the meta-space defined by the attributes. AutoClass uses Gaussian distributions over the real valued attributes, and Bernoulli distributions over the discrete attributes. Default class models are provided.

AutoClass finds the set of classes that is maximally probable with respect to the data and model. The output is a set of class descriptions, and partial membership of the instances in the classes.
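The notion of partial membership in a mixture of conditionally independent classes can be illustrated with binary (Bernoulli) attributes only. The sketch below is not AutoClass code and does not use its API: it assumes the class weights and attribute probabilities are already known, whereas AutoClass searches for them, and the `ClassModel` type and fixed sizes are illustrative.

```c
#include <assert.h>
#include <stddef.h>

#define N_ATTRS 3    /* binary attributes per instance (illustrative) */
#define N_CLASSES 2  /* mixture components (illustrative) */

typedef struct {
    double weight;            /* prior probability of the class */
    double p_one[N_ATTRS];    /* P(attribute j = 1 | class) */
} ClassModel;

/*
 * Compute partial class memberships for one instance under a mixture of
 * conditionally independent Bernoulli attributes:
 *   P(c | x) is proportional to weight_c * prod_j P(x_j | c).
 * Writes N_CLASSES probabilities that sum to 1 into `membership`.
 */
void class_membership(const ClassModel models[N_CLASSES],
                      const int x[N_ATTRS],
                      double membership[N_CLASSES])
{
    assert(models != NULL && x != NULL && membership != NULL);

    double total = 0.0;
    for (size_t c = 0; c < N_CLASSES; c++) {
        double joint = models[c].weight;
        for (size_t j = 0; j < N_ATTRS; j++) {
            double p = models[c].p_one[j];
            joint *= x[j] ? p : 1.0 - p;
        }
        membership[c] = joint;
        total += joint;
    }
    /* Normalize; fall back to a uniform split if every joint is zero. */
    for (size_t c = 0; c < N_CLASSES; c++) {
        membership[c] = total > 0.0 ? membership[c] / total : 1.0 / N_CLASSES;
    }
}
```

With two equally weighted classes whose attribute probabilities are {0.9, 0.8, 0.1} and {0.2, 0.3, 0.7}, the instance {1, 1, 0} belongs to the first class with probability of about 0.97 and to the second with the remainder.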

Weka

Weka is a freely available data mining tool from the University of Waikato in New Zealand. It is a collection of machine learning algorithms for data mining tasks. The algorithms can either be applied directly to a dataset or called from your own Java code. Weka contains tools for data pre-processing, classification, regression, clustering, association rules, and visualization. It is also well suited for developing new machine learning schemes. (The weka is also a flightless bird with an inquisitive nature, found only on the islands of New Zealand.) Weka is open source software issued under the GNU General Public License, and it can also be applied to big data.

Semantic Distance

Semantic distance (or semantic similarity or semantic relatedness) is a metric defined over a set of documents or terms or concepts, where the idea of distance between them is based on the likeness of their meaning or semantic content. These are mathematical tools used to estimate the strength of the semantic relationship between units of language, concepts, or instances, through a numerical description obtained according to the comparison of information supporting their meaning or describing their nature.

Concretely, semantic distance can be estimated by defining a topological similarity, using ontologies to define the distance between terms or concepts. For example, a naive metric for comparing concepts that are ordered in a partially ordered set and represented as nodes of a directed acyclic graph (e.g., a taxonomy) would be the length of the shortest path linking the two concept nodes. (An extensive survey dedicated to the notion of semantic distance and semantic similarity is proposed in: Semantic Measures for the Comparison of Units of Language, Concepts or Entities from Text and Knowledge Base Analysis)
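The naive shortest-path metric can be sketched with a breadth-first search over a small taxonomy. The `Taxonomy` type, the fixed node limit, and the decision to ignore link direction when counting steps are assumptions made for illustration, not details from the Decision Aids implementation.

```c
#include <assert.h>
#include <string.h>

#define MAX_NODES 16

/* Taxonomy stored as a symmetric adjacency matrix over concept indices. */
typedef struct {
    int n;                               /* number of concepts in use */
    unsigned char adj[MAX_NODES][MAX_NODES];
} Taxonomy;

void taxonomy_init(Taxonomy *t, int n)
{
    assert(n >= 0 && n <= MAX_NODES);
    t->n = n;
    memset(t->adj, 0, sizeof t->adj);
}

/* Record an is-a link; direction is ignored when measuring distance. */
void taxonomy_link(Taxonomy *t, int child, int parent)
{
    assert(child >= 0 && child < t->n && parent >= 0 && parent < t->n);
    t->adj[child][parent] = 1;
    t->adj[parent][child] = 1;
}

/*
 * Breadth-first search for the number of links on the shortest path
 * between two concepts. Returns -1 when they are not connected.
 */
int semantic_distance(const Taxonomy *t, int from, int to)
{
    int dist[MAX_NODES];
    int queue[MAX_NODES];   /* each node is enqueued at most once */
    int head = 0, tail = 0;

    for (int i = 0; i < t->n; i++) {
        dist[i] = -1;
    }
    dist[from] = 0;
    queue[tail++] = from;

    while (head < tail) {
        int cur = queue[head++];
        if (cur == to) {
            return dist[cur];
        }
        for (int next = 0; next < t->n; next++) {
            if (t->adj[cur][next] && dist[next] < 0) {
                dist[next] = dist[cur] + 1;
                queue[tail++] = next;
            }
        }
    }
    return -1;
}
```

In a taxonomy where "weka" is a bird, "dog" is a mammal, and both birds and mammals are animals, the distance from "weka" to "dog" is 4 links, while "weka" to "bird" is 1. Smaller values indicate more closely related concepts.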